This post covers the perspective camera in three.js and how to set an appropriate viewing frustum for scenes that span massive distances.

The perspective camera uses a projection mode designed to mimic the way humans see things in the real world. It is the most common projection mode used for rendering a 3D scene. - three.js

In the three.js documentation, the perspective camera constructor looks like this:

new THREE.PerspectiveCamera( fov, aspect, near, far )

where:

fov is the camera's vertical field of view, in degrees; aspect is the camera's aspect ratio (width divided by height); near is the distance to the near plane; and far is the distance to the far plane.

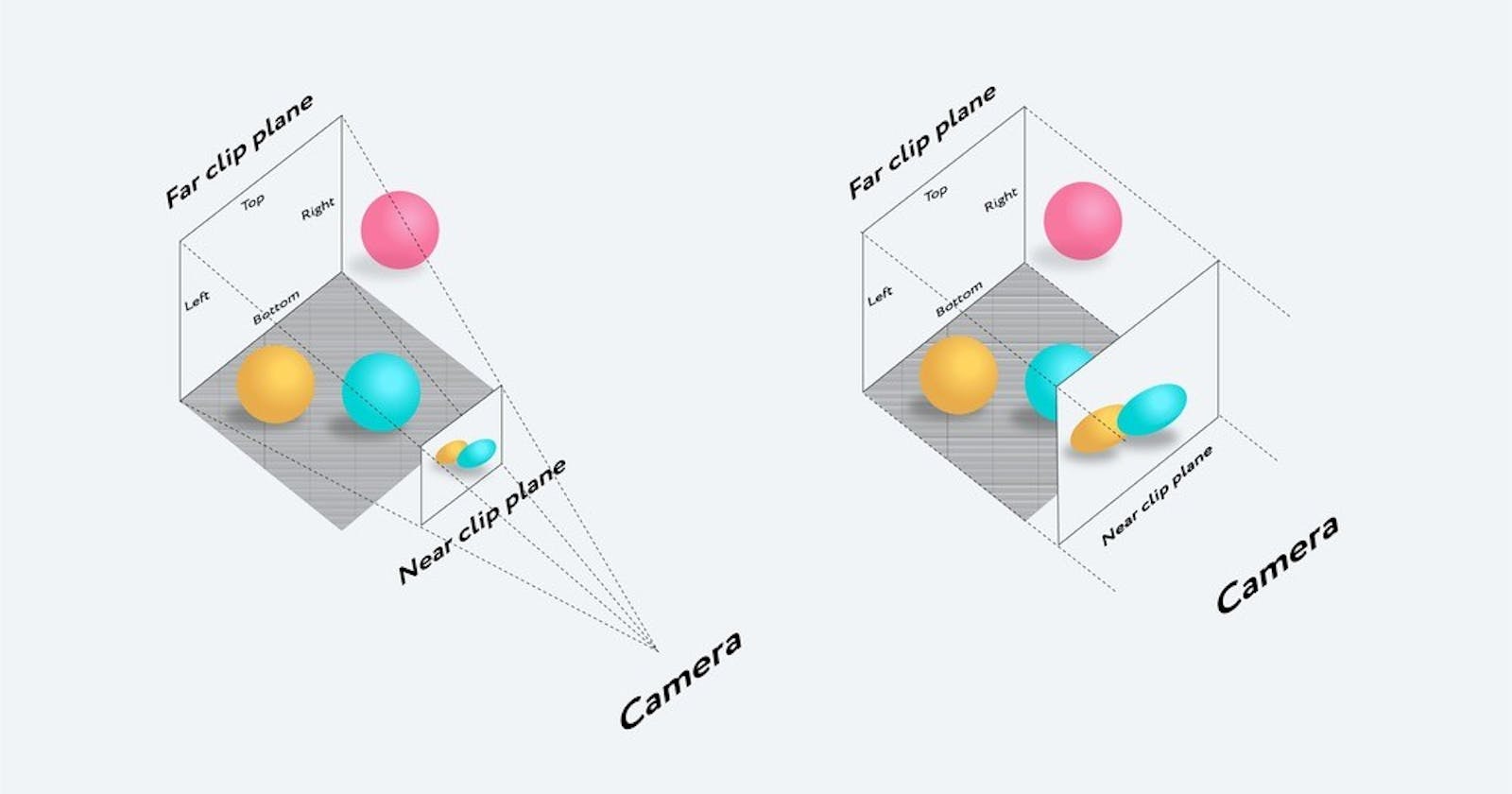

Together these define the camera's viewing frustum: the region of the 3D scene that is rendered and appears on the screen.

For example:

const camera = new THREE.PerspectiveCamera( 45, width / height, 1, 1000 );

Basically, you can set fov, near, and far to any values you like. For instance, lower fov to around 10 or 20 and everything appears zoomed in, so you mostly see the front of your objects, whereas everything in your scene appears to move far away if you set fov to around 90 or 100.
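To see why a small fov looks zoomed in, it helps to work out how much of the scene fits in view at a given distance. The sketch below is plain JavaScript with no three.js dependency. `visibleSizeAt` is a hypothetical helper name, and it assumes fov is the vertical field of view in degrees.

```javascript
// Hypothetical helper: size of the visible area at `distance` in front of the camera.
// Assumes `fovDeg` is the vertical field of view in degrees.
function visibleSizeAt(fovDeg, aspect, distance) {
  const fovRad = (fovDeg * Math.PI) / 180;
  const height = 2 * distance * Math.tan(fovRad / 2);
  return { width: height * aspect, height };
}

const aspect = 16 / 9;
for (const fov of [10, 45, 90]) {
  const { width, height } = visibleSizeAt(fov, aspect, 10);
  console.log(`fov ${fov}: ${width.toFixed(2)} x ${height.toFixed(2)} units visible at distance 10`);
}
```

The narrower the fov, the smaller the visible area, so the same object fills more of the screen.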

How about near and far?

You can certainly set the near and far planes to any values as well, but if the near-far range is very large you will get more z-fighting, whereas if the range is too small, anything outside it is clipped and your scene breaks.

Ideally, if you set near = 0.01 then far should be about 1000, and if you set near = 1 then you can go further out, to something like far = 10000.

However, if you really need massive distances, you can go with near = 0.0000000001 and far = 10000000000000. In that case, though, you will probably need to set an extra option on your WebGLRenderer.
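To get a rough feel for these numbers, the sketch below estimates the smallest depth difference a standard perspective depth buffer can tell apart at a given distance. It is plain JavaScript with no three.js dependency. `depthResolutionAt` is a hypothetical helper, and the 24-bit fixed-point depth buffer is an assumption (a common size, but it varies by device).

```javascript
// Hypothetical helper: approximate smallest depth difference (in world units) that a
// standard perspective depth buffer can resolve at distance `z` from the camera.
// Assumes a fixed-point depth buffer with `bits` bits (24 is a common size).
function depthResolutionAt(z, near, far, bits = 24) {
  const steps = 2 ** bits - 1;
  // Window depth is d(z) = far * (z - near) / ((far - near) * z);
  // its slope with respect to z is far * near / ((far - near) * z * z).
  const slope = (far * near) / ((far - near) * z * z);
  return 1 / (steps * slope);
}

const setups = [
  { near: 0.01, far: 1000, z: 1000 },
  { near: 1, far: 10000, z: 10000 },
  { near: 1e-10, far: 1e13, z: 1 },
];
for (const { near, far, z } of setups) {
  const res = depthResolutionAt(z, near, far);
  console.log(`near=${near}, far=${far}: about ${res.toPrecision(3)} units at distance ${z}`);
}
```

Under these assumptions, the first two setups can only separate surfaces a few units apart right at the far plane, while the extreme range cannot even separate surfaces hundreds of units apart at distance 1.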

Why?

The reason is your GPU only has so much precision to decide if something is in front or behind something else. That precision is spread out between near and far. Worse, by default the precision close to the camera is detailed and the precision far from the camera is coarse. The units start with near and slowly expand as they approach far. - threejsfundamentals.org

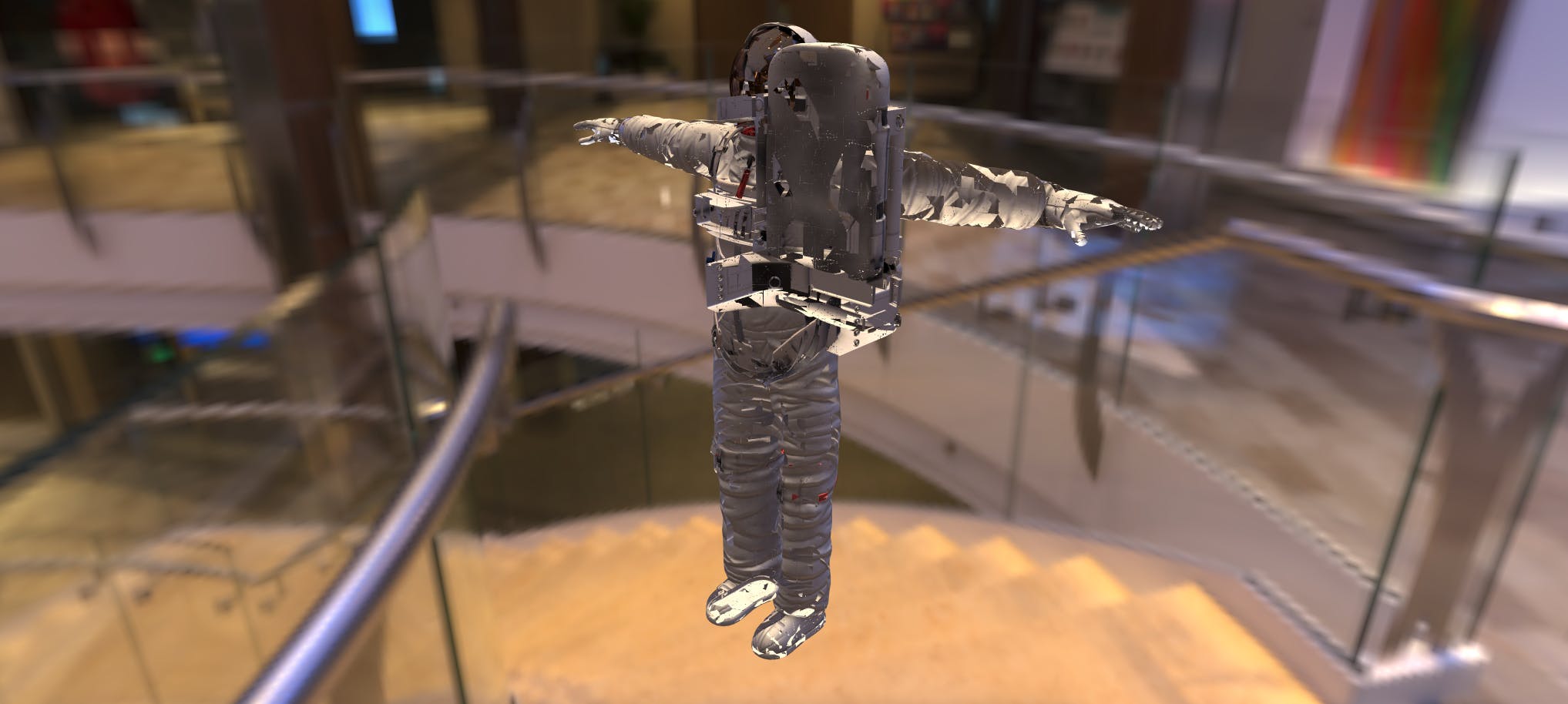
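The effect can be reproduced with a little arithmetic. The sketch below is plain JavaScript with no three.js dependency. It maps two nearby surfaces to depth values with the standard perspective mapping and then rounds them the way a depth buffer would. The function names are hypothetical and the 24-bit fixed-point storage is an assumption.

```javascript
// Window-space depth in [0, 1] for a point `z` units in front of the camera,
// using the standard perspective mapping (0 at near, 1 at far).
function perspectiveDepth(z, near, far) {
  return (far * (z - near)) / ((far - near) * z);
}

// Assumption: the depth buffer stores 24-bit fixed-point values.
function quantize(depth, bits = 24) {
  return Math.round(depth * (2 ** bits - 1));
}

// Two surfaces 0.001 units apart, about 10 units from the camera.
const zFront = 10;
const zBack = 10.001;

for (const near of [0.1, 0.00000001]) {
  const far = 10000;
  const a = quantize(perspectiveDepth(zFront, near, far));
  const b = quantize(perspectiveDepth(zBack, near, far));
  console.log(`near=${near}: stored depths ${a} and ${b}`, a === b ? "(indistinguishable)" : "(distinct)");
}
```

When both surfaces round to the same stored value, the GPU has no way to tell which one is in front.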

To illustrate this, take a simple scene with a 3D model, where the camera and renderer are set up like this:

    // camera
    this.camera = new THREE.PerspectiveCamera(
      45, // fov
      window.innerWidth / window.innerHeight, // aspect
      0.00000001, // near (extremely small on purpose)
      10000 // far
    );
    this.camera.position.set(10, 6, 10);

    // renderer
    this.renderer = new THREE.WebGLRenderer({ antialias: true });
    this.renderer.setPixelRatio(window.devicePixelRatio);
    this.renderer.setSize(window.innerWidth, window.innerHeight);
    this.renderer.physicallyCorrectLights = true;
    this.renderer.outputEncoding = THREE.sRGBEncoding;

What do you think will happen?

Something goes wrong with the 3D model: its textures look completely broken. This is an example of z-fighting, where the GPU does not have enough precision to decide which pixels are in front and which are behind.

One solution is to tell three.js to use a different method to compute which pixels are in front and which are behind. We can do that by enabling logarithmicDepthBuffer in the WebGLRenderer constructor.

So our renderer now looks like this:

    // renderer
    this.renderer = new THREE.WebGLRenderer({
      antialias: true,
      logarithmicDepthBuffer: true
    });
    // ...

Check the result and it should work...
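To get an intuition for why a logarithmic mapping helps, the sketch below compares it with the standard perspective mapping for the same two surfaces. It is plain JavaScript with no three.js dependency. The `logDepth` formula is an illustrative assumption about the general shape of a logarithmic depth mapping, not a copy of the shader code three.js uses, and the 24-bit storage is also an assumption.

```javascript
// Standard perspective depth, for comparison (0 at near, 1 at far).
function perspectiveDepth(z, near, far) {
  return (far * (z - near)) / ((far - near) * z);
}

// Illustrative logarithmic mapping: depth grows with log2(1 + z).
// Assumption: this is the general shape of a log depth buffer, not three.js's shader code.
function logDepth(z, far) {
  return Math.log2(1 + z) / Math.log2(1 + far);
}

// Assumption: 24-bit fixed-point storage.
const quantize = (depth) => Math.round(depth * (2 ** 24 - 1));

const near = 0.00000001;
const far = 10000;
const [zFront, zBack] = [10, 10.001];

console.log("perspective:", quantize(perspectiveDepth(zFront, near, far)), quantize(perspectiveDepth(zBack, near, far)));
console.log("logarithmic:", quantize(logDepth(zFront, far)), quantize(logDepth(zBack, far)));
```

The logarithmic mapping does not depend on near at all in this sketch, so a tiny near value no longer eats up the available precision.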

The logarithmicDepthBuffer option clearly does the trick, but it is not always recommended because it can be significantly slower than the standard solution, especially on most mobile devices.

That means you should always adjust near and far to fit your needs first. For now, just be aware that there is an alternative you can try if you want to draw a giant scene across massive distances without breaking any object in it.
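One way to keep the range tight is to derive near and far from the bounds of what you actually want to see. The sketch below is plain JavaScript with no three.js dependency. `fitNearFar` and its `minNear` floor are hypothetical, and it assumes you already know a bounding sphere (center and radius) for your scene.

```javascript
// Hypothetical helper: pick near/far so they tightly enclose a bounding sphere.
// `cameraPos` and `center` are plain { x, y, z } objects; `minNear` is an assumed floor
// that keeps near from collapsing to zero when the camera is inside the sphere.
function fitNearFar(cameraPos, center, radius, minNear = 0.1) {
  const distance = Math.hypot(
    center.x - cameraPos.x,
    center.y - cameraPos.y,
    center.z - cameraPos.z
  );
  return {
    near: Math.max(minNear, distance - radius),
    far: distance + radius,
  };
}

const { near, far } = fitNearFar({ x: 10, y: 6, z: 10 }, { x: 0, y: 0, z: 0 }, 5);
console.log(`near=${near.toFixed(2)}, far=${far.toFixed(2)}`);
```

In three.js you would then assign these values to camera.near and camera.far and call camera.updateProjectionMatrix() so the change takes effect.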

Hope this helps!